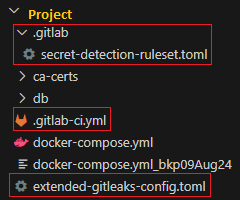

Once your GitLab project is created, add the following files to it:

# .gitlab-ci.yml

    # See https://docs.gitlab.com/ee/ci/variables/#cicd-variable-precedence
    include:
      - template: Jobs/Secret-Detection.gitlab-ci.yml

    secret_detection:
      variables:
        SECRETS_ANALYZER_VERSION: "4.5"

# .gitlab/secret-detection-ruleset.toml

    # https://docs.gitlab.com/ee/user/application_security/secret_detection/pipeline/index.html#create-a-ruleset-configuration-file
    [secrets]
      [[secrets.passthrough]]
        type = "file"
        target = "gitleaks.toml"
        value = "extended-gitleaks-config.toml"

# extended-gitleaks-config.toml

    # See https://docs.gitlab.com/ee/user/application_security/secret_detection/pipeline/index.html#detecting-complex-strings
    [extend]
    # Extends default packaged ruleset, NOTE: do not change the path.
    path = "/gitleaks.toml"

    [[rules]]
    description = "Generic Password Rule"
    id = "generic-password"
    regex = '''(?i)(?:pwd|passwd|password)(?:[0-9a-z\-_\t .]{0,20})(?:[\s|']|[\s|"]){0,3}(?:=|>| =:|:{1,3}=|\|\|:|<=|=>|:|\?=)(?:'|\"|\s|=|\x60){0,5}([0-9a-z\-_.=\S_]{3,50})(?:[' |\"|\n|\r|\s|\x60|;]|$)'''
    entropy = 3.5
    keywords = ["pwd", "passwd", "password"]

    [[rules]]
    description = "Generic Complex Rule"
    id = "COMPLEX_PASSWORD"
    regex = '''(?i)(?:key|api|token|secret|client|passwd|password|PASSWORD|auth|access)(?:[0-9a-z\-_\t .]{0,20})(?:[\s|']|[\s|"]){0,3}(?:=|>|:{1,3}=|\|\|:|<=|=>|:|\?=)(?:'|\"|\s|=|\x60){0,5}([0-9a-z\-_.=]{10,150})(?:['|\"|\n|\r|\s|\x60|;]|$)'''
    severity = "high"
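The `entropy = 3.5` setting in the first rule is worth a closer look. Gitleaks describes it as the minimum Shannon entropy the matched group must have to count as a secret. The sketch below approximates that calculation with `awk` so you can gauge whether a candidate value is likely to clear the threshold. The function name `shannon_entropy` and the sample strings are hypothetical, and the Gitleaks implementation may differ in detail.

```shell
# Approximate the Shannon entropy (bits per character) of a single-line string.
# Illustrative helper only; not part of Gitleaks or GitLab.
shannon_entropy() {
  printf '%s' "$1" | awk '
    {
      n = length($0)
      for (i = 1; i <= n; i++) count[substr($0, i, 1)]++
    }
    END {
      for (c in count) {
        p = count[c] / n
        h -= p * log(p) / log(2)
      }
      printf "%.2f\n", h
    }'
}

shannon_entropy "aaaaaaaa"   # prints 0.00 (one repeated character)
shannon_entropy "abcdabcd"   # prints 2.00 (four equally likely characters)
```

Both samples fall well below 3.5, so values this repetitive would be filtered out by the entropy check even if the regex matched.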

For more complex rules, refer to the default Gitleaks configuration: https://github.com/gitleaks/gitleaks/blob/master/config/gitleaks.toml
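Before committing a custom rule, it can be useful to try its regex against a few sample lines. The sketch below does this for the `generic-password` regex from above using `grep -P`. It rests on several assumptions: GNU grep built with PCRE support is available, PCRE behaves closely enough to the Go regex engine Gitleaks uses for this pattern, and the sample lines are made up. It checks the regex only, not the `entropy` or `keywords` filters.

```shell
# Regex copied verbatim from the generic-password rule; the quoted heredoc avoids shell quoting issues.
pattern="$(cat <<'EOF'
(?i)(?:pwd|passwd|password)(?:[0-9a-z\-_\t .]{0,20})(?:[\s|']|[\s|"]){0,3}(?:=|>| =:|:{1,3}=|\|\|:|<=|=>|:|\?=)(?:'|\"|\s|=|\x60){0,5}([0-9a-z\-_.=\S_]{3,50})(?:[' |\"|\n|\r|\s|\x60|;]|$)
EOF
)"

# Succeeds (exit status 0) when the given line matches the rule regex.
matches_rule() {
  printf '%s\n' "$1" | grep -qP -- "$pattern"
}

# Hypothetical sample lines.
for line in 'password = "S3cr3tValue123"' 'username = "alice"'; do
  if matches_rule "$line"; then
    echo "match:    $line"
  else
    echo "no match: $line"
  fi
done
```

A line that matches here can still be ignored by the analyzer if its captured value falls below the entropy threshold.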

Once this structure is in place, enable a GitLab Runner and run the pipeline.

If no runner is registered yet, do the following within your project.

Reference: https://docs.gitlab.com/runner/register/

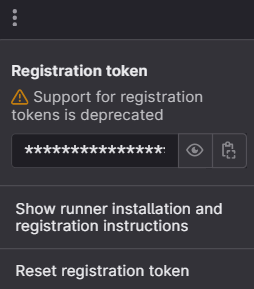

Navigate to your GitLab project > Settings > CI/CD and expand the Runners section.

Click the three dots and copy the registration token.

If gitlab-runner is not installed on the VM, install it as follows:

    $ curl -L "https://packages.gitlab.com/install/repositories/runner/gitlab-runner/script.deb.sh" | sudo bash
    $ sudo apt install -y gitlab-runner
    $ gitlab-runner --version
    $ sudo systemctl status gitlab-runner
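Before running the installer, it can help to check whether the binary is already on the PATH. This is a minimal sketch assuming a POSIX shell; the helper name `runner_status` and the messages are illustrative.

```shell
# Report whether gitlab-runner is already installed (illustrative helper).
runner_status() {
  if command -v gitlab-runner >/dev/null 2>&1; then
    echo "installed"
  else
    echo "missing"
  fi
}

case "$(runner_status)" in
  installed) gitlab-runner --version ;;
  missing)   echo "gitlab-runner not found; follow the install steps above" ;;
esac
```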

If the public key cannot be verified (NO_PUBKEY 3F01618A51312F3F), fall back to the legacy apt-key method:

    # Remove previous attempts
    $ sudo rm -f /usr/share/keyrings/gitlab-runner-archive-keyring.gpg
    $ sudo rm -f /etc/apt/sources.list.d/gitlab-runner.list

    # Import key using apt-key (legacy)
    $ curl -fsSL https://packages.gitlab.com/runner/gitlab-runner/gpgkey | sudo apt-key add -

    # Add repo without signed-by
    $ echo "deb https://packages.gitlab.com/runner/gitlab-runner/ubuntu jammy main" | sudo tee /etc/apt/sources.list.d/gitlab-runner.list

    # Update and install
    $ sudo apt update
    $ sudo apt install -y gitlab-runner

    # Verify gitlab-runner
    $ gitlab-runner --version
    Version:      17.10.1
    Git revision: ef334dcc
    Git branch:   17-10-stable
    GO version:   go1.23.6 X:cacheprog
    Built:        2025-03-26T12:24:33Z
    OS/Arch:      linux/amd64

Now register the runner:

    $ gitlab-runner register
    Runtime platform arch=amd64 os=linux pid=927974 revision=853330f9 version=16.5.0
    Running in system-mode.

    Enter the GitLab instance URL (for example, https://gitlab.com/):
    https://gitlab.company.domain.com/
    Enter the registration token:
    GR13489416BxxxxAG2y_9-ysB_tdR
    Enter a description for the runner:
    [gitrunnerinstance01]: myproject-runner
    Enter tags for the runner (comma-separated):

    Enter optional maintenance note for the runner:

    WARNING: Support for registration tokens and runner parameters in the 'register' command has been deprecated in GitLab Runner 15.6 and will be replaced with support for authentication tokens. For more information, see https://docs.gitlab.com/ee/ci/runners/new_creation_workflow
    Registering runner... succeeded runner=GR13489416BtsyuAG
    Enter an executor: shell, ssh, docker-autoscaler, docker+machine, kubernetes, custom, docker, docker-windows, parallels, virtualbox, instance:
    docker
    Enter the default Docker image (for example, ruby:2.7):
    gcr.io/kaniko-project/executor
    Runner registered successfully. Feel free to start it, but if it's running already the config should be automatically reloaded!

    Configuration (with the authentication token) was saved in "/etc/gitlab-runner/config.toml"
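The same answers can also be passed as flags so that registration runs without prompts, which is handy for scripted setups. The sketch below only prints the command for review (a dry run). It reuses the values from the session above; `REPLACE_ME` is a placeholder for your own token and the helper name `build_register_command` is hypothetical. Note that this still uses the registration-token flow that the warning above marks as deprecated.

```shell
GITLAB_URL="https://gitlab.company.domain.com/"
# Supply the real token through the environment; REPLACE_ME is only a placeholder.
REGISTRATION_TOKEN="${REGISTRATION_TOKEN:-REPLACE_ME}"

# Print the non-interactive equivalent of the prompts answered above.
build_register_command() {
  echo gitlab-runner register --non-interactive \
    --url "$GITLAB_URL" \
    --registration-token "$REGISTRATION_TOKEN" \
    --description "myproject-runner" \
    --executor "docker" \
    --docker-image "gcr.io/kaniko-project/executor"
}

# Dry run: show the command so it can be checked before use.
build_register_command
```

To actually register, run the printed command with sudo so that it runs in system mode like the interactive session.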

The leak report shows the detected secrets: